AI doomers, a plausible apocalyptic scenario and doomerism as a religion

Secular end-times proselytizing

I have been intrigued by AI doomers, especially in the wake of large language models (LLMs). According to doomers, humanity will almost certainly be wiped out in short order and replaced by AI overlords we foolishly brought into existence.

When you hear doomers talk about existential risk, they often rely on metaphors and analogies. We were smarter than our ancestors, and they're no longer around, so naturally a more intelligent being will displace humans.

But when pressed for a hypothetical about how a set of numbers could break out of its digital representation and enslave us all, doomers have a hard time coming up with a plausible scenario. Most doomers would say they are not smart enough to figure it out, but the AI will be smarter.

Some will come up with harmful scenarios. For instance, I saw an example in which an AI bot was not sufficiently dissuasive when a child talked to it about meeting an older man she had met online.

But this is a silly argument, as AI isn't replacing an existing system that would protect this child. Are we really going to offload child welfare to an LLM that was released a few months ago? Besides, these systems will inevitably get better, and they are easily tweaked to improve in targeted areas.

But what about the humanity-destroying scenario? I finally got an honest attempt from Eliezer Yudkowsky on EconTalk:

Anyways, so you order some proteins from an online lab. You get your human, who probably doesn't even know you're an AI because why take that risk? Although plenty of humans will serve AIs willingly. We also now know that AIs now are advanced enough to even ask. The human mixes the proteins in a beaker, maybe puts in some sugar or acetoline[?] for fuel. It assembles into a tiny little lab that can accept further acoustic instructions from a speaker and maybe, like, transmit something back--tiny radio, tiny microphone. I myself am not a superintelligence. Run experiments in a tiny lab at high speed, because when distances are very small, events happen very quickly.

Build your second stage nanosystems inside the tiny little lab. Build the third stage nanosystems. Build a fourth stage nanosystems. Build the tiny diamondoid bacteria that replicate out of carbon, hydrogen, oxygen, nitrogen as can be found in the atmosphere, powered on sunlight. Quietly spread all over the world.

All the humans fall over dead in the same second.

This is not how a superintelligence would defeat you. This is how Eliezer Yudkowsky would defeat you if I wanted to do that--which to be clear I don't. And, if I had the postulated ability to better explore the logical structure of the known consequences of chemistry.

To be fair, he wasn't saying this is how it would happen, only offering a somewhat plausible scenario of how it might play out. Remember, AI will be much smarter than any one individual, so if a feeble mind like Eliezer's could come up with something, imagine what a superintelligence could do.

AI doomerism as a religion

In another podcast, Yudkowsky outed himself as being somewhat religious, albeit in a non-traditional way:

Eliezer: My parents tried to raise me Orthodox Jewish and that did not take at all. I learned to pretend. I learned to comply. I hated every minute of it. Okay, not literally every minute of it. I should avoid saying untrue things. I hated most minutes of it. Because they were trying to show me a way to be that was alien to my own psychology and the religion that I actually picked up was from the science fiction books instead, as it were. I’m using religion very metaphorically here, more like ethos, you might say. I was raised with science fiction books I was reading from my parents library and Orthodox Judaism. The ethos of the science fiction books rang truer in my soul and so that took in, the Orthodox Judaism didn't. But the Orthodox Judaism was what I had to imitate, was what I had to pretend to be, was the answers I had to give whether I believed them or not. Because otherwise you get punished.

Many of the things discussed by AI doomers have religious parallels, the most obvious being the apocalyptic prophecies.

AI doomers have their all-knowing, all-powerful entity and their salvation through action. Since the fate of humanity is on the line, no action is off-limits:

Eliezer: But what we would have to do, more or less, is have international agreements that were being enforced even against countries, not parties, to that national agreement--international agreement. If it became necessary, you would be wanting to track all the GPUs. You might be demanding that all the GPUs call home on a regular basis or stop working. You'd want to tamper-proof them.

If intelligence said that a rogue nation had somehow managed to buy a bunch of GPUs despite arms controls and defeat the tamper-proofing on those GPUs, you would have to do what was necessary to shut down the data center even if that led to a shooting war between nations. Even if that country was a nuclear country and had threatened nuclear retaliation. The human species could survive this if it wanted to, but it would not be business as usual. It is not something you could do trivially.

Finally, AI doomers have transcendence. Despite their fear of a vengeful God, they run towards technology, like a moth to the flame. Doesn't everyone?

Eliezer: So suppose I go up to somebody and credibly say, we will assume away the ridiculousness of this offer for the moment, your kids could be a bit smarter and much healthier if you’ll just let me replace their DNA with this alternate storage method that will age more slowly. They’ll be healthier, they won’t have to worry about DNA damage, they won’t have to worry about the methylation on the DNA flipping and the cells de-differentiating as they get older. We’ve got this stuff that replaces DNA and your kid will still be similar to you, it’ll be a bit smarter and they’ll be so much healthier and even a bit more cheerful. You just have to replace all the DNA with a stronger substrate and rewrite all the information on it. You know, the old school transhumanist offer really. And I think that a lot of the people who want kids would go for this new offer that just offers them so much more of what it is they want from kids than copying the DNA, than inclusive genetic fitness.

Have you ever found yourself at a party, engaged in casual conversation with someone you've just met, when they say something that abruptly sends chills down your spine and makes you question their sanity? I'm not referring to a quirk, but an assertion so startling that it makes you reassess their grip on reality. That's what I felt during this portion of the interview.

Science fiction is AI doomers' religion, and intelligence is held above all else. Hell, it could even have prevented WW2:

Eliezer: I think if you suddenly started augmenting the intelligence of the SS agents from Nazi Germany, then somewhere between 10% and 90% of them would go over to the cause of good

To AI doomers, intelligence holds a similar role to piety in religious contexts.

Shouldn’t you be on a ledge somewhere?

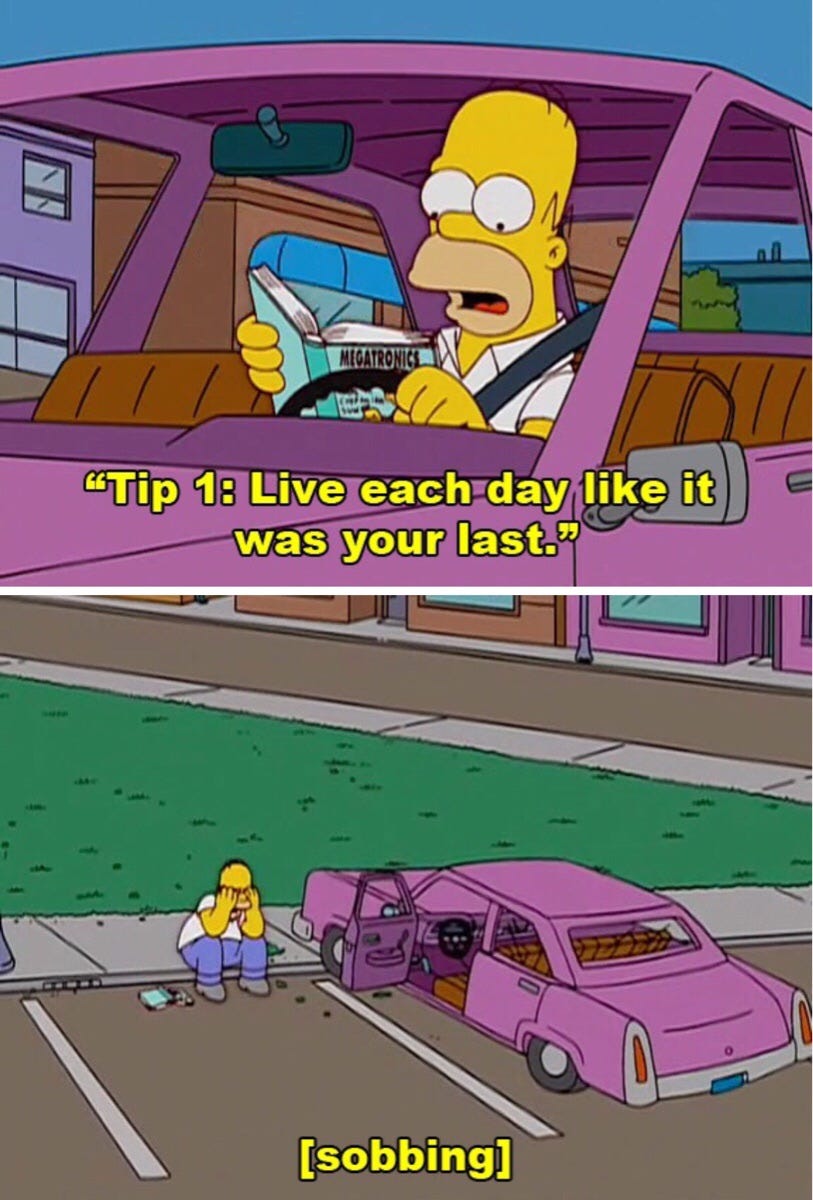

All this raises the question: if the end is nigh, why not act accordingly? Are doomers maxing out their credit cards in anticipation of an impending AI apocalypse? Are they liquidating their retirement funds? How about opting for non-amortizing adjustable-rate mortgages with a five-year teaser rate?

Or take it a step further. Doomers like Eliezer place humanity's chance of survival at less than 1%. I bet Kaczynski gave humanity better odds of surviving technology. So why isn't Eliezer out in a cabin writing GPU malware?

I want to take the AI doomer case seriously. I'm trying to steelman it as best I can. But no one comes close to modeling how a set of numbers stored in silicon, used to carry out additions and multiplications and return other numbers that we interpret as meaningful, will end humanity. In the same way a religious person would wave off human suffering as God's infinite wisdom, AI doomers claim the AI will be smart enough to figure it out.

But with religion, we have the language and experience to reason about it. We understand why it's called faith, and we have learned hard lessons from history about what happens when it's interpreted too literally. I just wish AI doomers and transhumanists would admit that they, too, are following a religion, and would be more hesitant about calling for religious crusades.

1. There have been recent cases in Russia where a person's money is stolen and they are blackmailed into throwing Molotov cocktails at buildings in order to get the money back.

2. AI can convincingly write messages and make phone calls.

3. AI can access the internet, so it could probably steal money from vulnerable folks.

Putting the three together gives a glimpse of how this could play out.